Let’s start with a formal definition of the term. Don’t worry if it doesn’t make sense yet; we will unpack it below.

Semantic segmentation is a computer vision technique that uses deep learning algorithms to assign class labels to pixels in an image. This process divides an image into different regions of interest, with each region classified into a specific category.

Now, let’s break down this concept step by step with a simple use case.

Meet Alex, a young and enthusiastic urban planner. He has a big dream: to design smarter, more efficient cities. To understand what makes a city "smarter" or "more efficient," Alex needs to study how cities function. For example, he needs to distinguish between different types of land cover, such as buildings, roads, water bodies, and green spaces.

This helps him assess the amount of green space, evaluate vegetation health, and plan for the creation or preservation of parks and natural areas. Additionally, he can classify areas based on pedestrian usage, identify heavily used and underutilized spaces, and plan interventions to improve accessibility and safety, like adding benches, lighting, or pedestrian crossings.

These are just a few ways Alex can use data to make informed decisions and design better cities.

After understanding the goals, Alex starts thinking: Now what? How can he analyze a city? How can he make informed decisions about best practices and areas for improvement?

So his journey begins.

Step 1: Understanding Semantic Segmentation

Semantic segmentation gives a computer a more human-like understanding of images. Instead of just recognizing an entire image as a "cityscape" or "street view," it breaks the image down into its smallest parts and labels each one. Every pixel in the image is assigned a category: this pixel is part of a road, that one is a building, and those over there are trees.

By using this technique, Alex can categorize and label every pixel in each image. He can then use the labeled dataset to train a machine learning model that labels new images automatically. Analyzing the labeled output shows how much of the city each category covers and where it is located. These insights can inform urban planning decisions, optimize traffic management, and enhance public space design.
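To make "a category for every pixel" concrete, here is a minimal sketch of what a segmentation result can look like as data. The class IDs, class names, and the tiny 4x6 grid are all illustrative assumptions, not output from a real model or from CVAT.ai.

```python
# Hypothetical class IDs; the names and numbers are illustrative only.
CLASSES = {0: "road", 1: "building", 2: "tree"}

# A 4x6 "image" after segmentation: each entry is the class ID of one pixel.
label_map = [
    [1, 1, 0, 0, 2, 2],
    [1, 1, 0, 0, 2, 2],
    [1, 1, 0, 0, 0, 2],
    [0, 0, 0, 0, 0, 0],
]


def class_at(label_map, row, col):
    """Return the class name assigned to the pixel at (row, col)."""
    return CLASSES[label_map[row][col]]


def count_pixels(label_map):
    """Count how many pixels were assigned to each class name."""
    counts = {name: 0 for name in CLASSES.values()}
    for row in label_map:
        for class_id in row:
            counts[CLASSES[class_id]] += 1
    return counts


print(class_at(label_map, 0, 0))
print(count_pixels(label_map))
```

A real label map has the same shape as the source image, so it holds millions of entries rather than 24, but the idea is the same.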

Step 2: Preparing the Data

Alex learns that to teach a computer to understand images, it needs a lot of examples. So he gathers a dataset of city images and organizes them into a folder. This is known as the data collection step.

At this step, Alex may run into several challenges:

- It can be difficult to collect sufficient data, as privacy issues may arise when using images from certain sources.

- Additionally, finding the most useful data for training a deep learning model requires careful consideration.

- Alex also needs to filter out duplicated data to ensure the dataset's quality.

With the data ready, the next step is to add labels to the objects in the images.

We will discuss these steps and their challenges in more detail in future articles.
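As a small preview of the duplicate-filtering challenge, the sketch below flags files whose bytes are identical by comparing SHA-256 digests. The function names and the list of extensions are assumptions made for illustration. This approach only catches exact copies; resized or recompressed versions of the same photo would need a perceptual comparison instead.

```python
import hashlib
from pathlib import Path


def file_digest(path):
    """Return the SHA-256 hex digest of a file's bytes."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def find_unique_images(folder, extensions=(".jpg", ".jpeg", ".png")):
    """Split image files in a folder into (unique, duplicates).

    The first file seen for each digest (in sorted name order) is kept as
    unique; later files with the same bytes are reported as duplicates.
    """
    seen = set()
    unique, duplicates = [], []
    for path in sorted(Path(folder).iterdir()):
        if not path.is_file() or path.suffix.lower() not in extensions:
            continue  # skip subfolders and non-image files
        digest = file_digest(path)
        if digest in seen:
            duplicates.append(path)
        else:
            seen.add(digest)
            unique.append(path)
    return unique, duplicates
```

Calling `find_unique_images("city_images")` on a hypothetical folder returns two lists of paths, so Alex can review the duplicates before deleting anything.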

Step 2: Labeling the Data

Alex uploads the folder with data into the Computer Vision Annotation Tool (CVAT.ai). He can upload the images manually or pull them in from cloud storage. Then he carefully labels each pixel in the images, categorizing elements like roads, buildings, and trees.

For this task, Alex can use different tools, such as the Polygon tool or the Brush tool. Here is how it looks when he assigns buildings to one category and pools to another.
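A polygon annotation is just a list of corner points, while a brush annotation is already a set of painted pixels. The sketch below shows one simple way a polygon could be turned into a pixel mask, using the even-odd rule on pixel centers. It is an illustration under that assumption, not a description of how CVAT.ai rasterizes shapes internally, and the `building` coordinates are made up.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd rule: True if point (x, y) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray going right from (x, y) cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(polygon, width, height):
    """Rasterize a polygon into a binary mask by testing each pixel center."""
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


# Hypothetical rectangle drawn around a building, as (x, y) corner points.
building = [(1, 1), (4, 1), (4, 3), (1, 3)]
mask = polygon_to_mask(building, width=6, height=4)
for row in mask:
    print(row)
```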

And here is what is going on behind the scenes at this very moment:

Semantic segmentation models create a detailed map of an input image by assigning a specific category to each pixel. This process results in a segmentation map where every pixel is color-coded according to its category, forming segmentation masks.

A segmentation mask highlights a distinct part of the image, setting it apart from other regions. To achieve this, semantic segmentation models use complex neural networks. These networks group related pixels together into segmentation masks and accurately identify the real-world category for each segment.

For example, all the pixels that belong to the object “pool” now belong to the “pool” category, and all the pixels that belong to the object “building” are assigned to the “building” category.
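The relationship between per-category masks, the combined segmentation map, and its color coding can be sketched as follows. The class IDs, the palette colors, and the function names are assumptions chosen for illustration.

```python
# Hypothetical class IDs and display colors (RGB).
BACKGROUND, BUILDING, POOL = 0, 1, 2
PALETTE = {
    BACKGROUND: (0, 0, 0),
    BUILDING: (220, 20, 60),
    POOL: (30, 144, 255),
}


def masks_to_label_map(masks_by_class, height, width, background=BACKGROUND):
    """Merge binary masks into one map holding a class ID per pixel.

    If masks overlap, the mask listed later overwrites the earlier one.
    """
    label_map = [[background] * width for _ in range(height)]
    for class_id, mask in masks_by_class.items():
        for r in range(height):
            for c in range(width):
                if mask[r][c]:
                    label_map[r][c] = class_id
    return label_map


def colorize(label_map, palette):
    """Replace every class ID with its RGB color for display."""
    return [[palette[class_id] for class_id in row] for row in label_map]


building_mask = [[1, 1, 0, 0], [1, 1, 0, 0]]
pool_mask = [[0, 0, 0, 1], [0, 0, 0, 1]]
label_map = masks_to_label_map(
    {BUILDING: building_mask, POOL: pool_mask}, height=2, width=4
)
colored = colorize(label_map, PALETTE)
```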

One key point to understand is that semantic segmentation does not distinguish between instances of the same class; it only identifies the category of each pixel. This means that if there are two objects of the same category in your input image, the segmentation map will not differentiate between them as separate entities. To achieve that level of detail, instance segmentation models are used. These models can differentiate and label separate objects within the same category.
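The difference is easy to see in data: in a semantic map, two separate pools carry the same class ID. The sketch below separates them afterwards by grouping touching pixels (4-connected components). This is only a post-processing illustration, not an instance segmentation model, and it would merge two objects of the same class that touch each other. The class ID and the small map are made up.

```python
from collections import deque


def connected_components(label_map, class_id):
    """Return one set of (row, col) pixels per 4-connected region of class_id."""
    height, width = len(label_map), len(label_map[0])
    visited = set()
    regions = []
    for r in range(height):
        for c in range(width):
            if label_map[r][c] != class_id or (r, c) in visited:
                continue
            # Breadth-first flood fill starting from this unvisited pixel.
            region = set()
            queue = deque([(r, c)])
            visited.add((r, c))
            while queue:
                cr, cc = queue.popleft()
                region.add((cr, cc))
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if (0 <= nr < height and 0 <= nc < width
                            and label_map[nr][nc] == class_id
                            and (nr, nc) not in visited):
                        visited.add((nr, nc))
                        queue.append((nr, nc))
            regions.append(region)
    return regions


POOL = 2  # hypothetical class ID
semantic_map = [
    [2, 2, 0, 0, 0],
    [2, 2, 0, 2, 2],
    [0, 0, 0, 2, 2],
]
pools = connected_components(semantic_map, POOL)
print(len(pools), "separate pool regions")
```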

Here is a video showing different types of segmentation applied to the same image:

Step 3: Training the Model with Annotated Data

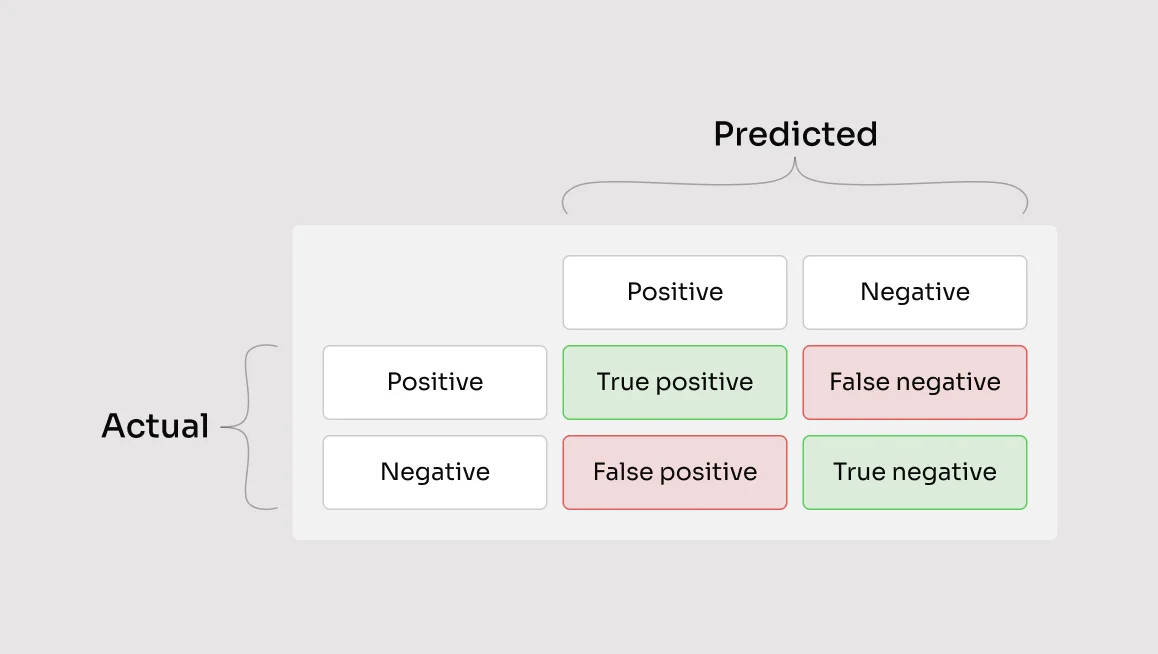

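Once the annotation is complete, Alex exports the annotated dataset from CVAT.ai. He then feeds this labeled data into a deep learning model designed for semantic segmentation. Cityscapes and PASCAL VOC are popular benchmark datasets for this task, and the very popular YOLOv8 family includes segmentation models. Models are usually evaluated with the Mean Intersection-Over-Union (Mean IoU) and Pixel Accuracy metrics. Pixel Accuracy is the share of pixels labeled correctly, while Mean IoU averages, over all classes, the overlap between the predicted and the annotated region divided by their union.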
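Both metrics can be computed directly from a predicted label map and the annotated one. The following is a minimal sketch with made-up 2x4 maps. It skips classes that appear in neither map when averaging, which is one common convention but an assumption here.

```python
def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted class equals the annotated class."""
    total = correct = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            total += 1
            correct += (p == t)
    return correct / total


def mean_iou(pred, truth, class_ids):
    """Average of per-class intersection over union."""
    ious = []
    for class_id in class_ids:
        intersection = union = 0
        for pred_row, truth_row in zip(pred, truth):
            for p, t in zip(pred_row, truth_row):
                in_pred, in_truth = p == class_id, t == class_id
                intersection += (in_pred and in_truth)
                union += (in_pred or in_truth)
        if union:  # skip classes absent from both maps
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


truth = [[0, 0, 1, 1],
         [0, 0, 1, 1]]
pred = [[0, 0, 0, 1],
        [0, 0, 1, 1]]

print(pixel_accuracy(pred, truth))
print(mean_iou(pred, truth, class_ids=[0, 1]))
```

In this example one pixel out of eight is wrong. Class 0 has an IoU of 4/5 and class 1 has an IoU of 3/4, so Mean IoU penalizes the mistake more than Pixel Accuracy does.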

After selecting and training the model, Alex runs it on new, unseen images to test its performance. The model, now trained with Alex's labeled data, can automatically label every pixel in the images and provide detailed segmentation results.
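Many segmentation models output a score per class for every pixel, and the final label is the class with the highest score. The sketch below assumes that output layout (`scores[class][row][col]`) and uses invented numbers; a real model's output format depends on the framework.

```python
def scores_to_label_map(scores):
    """Pick, for each pixel, the class index with the highest score.

    `scores` is assumed to be indexed as scores[class][row][col].
    """
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda k: scores[k][r][c])
         for c in range(width)]
        for r in range(height)
    ]


# Invented scores for a 2x2 image and two classes (0 = road, 1 = building).
scores = [
    [[0.9, 0.2], [0.6, 0.1]],  # class 0
    [[0.1, 0.8], [0.4, 0.9]],  # class 1
]
predicted = scores_to_label_map(scores)
```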

Here are some examples of how it may look:

Step 4: Gathering Insights

By analyzing the results from the model, Alex gathers valuable insights:

- Traffic Patterns: Improved traffic flow and reduced congestion by optimizing traffic light timings and road designs.

- Green Space Distribution: Identification of areas needing more green space and better urban planning for environmental health.

- Public Space Utilization: Enhanced public space planning to increase accessibility and usage.

- Infrastructure Development: Efficient monitoring of construction projects and better planning of new infrastructure.

- Urban Heat Islands: Implementation of cooling strategies to mitigate heat island effects.
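As one example of turning segmentation results into an insight like green space distribution, the sketch below splits a label map into square tiles and reports the share of green pixels in each, then lists the tiles below a threshold. The class ID, the tile size, the threshold, and the small map are all hypothetical.

```python
def green_share_by_tile(label_map, green_id, tile):
    """Return {(tile_row, tile_col): fraction of green pixels} for square tiles."""
    height, width = len(label_map), len(label_map[0])
    shares = {}
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            pixels = [
                label_map[r][c]
                for r in range(top, min(top + tile, height))
                for c in range(left, min(left + tile, width))
            ]
            shares[(top // tile, left // tile)] = pixels.count(green_id) / len(pixels)
    return shares


def tiles_below(shares, threshold):
    """List the tiles whose green share is under the threshold."""
    return sorted(key for key, share in shares.items() if share < threshold)


GREEN = 2  # hypothetical class ID for green space
city = [
    [2, 2, 1, 1],
    [2, 0, 1, 1],
    [0, 0, 2, 1],
    [0, 0, 1, 1],
]
shares = green_share_by_tile(city, GREEN, tile=2)
needs_green = tiles_below(shares, threshold=0.3)
```

Here the top-left tile is 75% green, while the other three tiles fall under the 30% threshold and would be candidates for new parks.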

Now Alex can make informed decisions, because he has the processed data at hand.

Conclusion

Thanks to semantic segmentation, Alex can transform raw images into valuable insights without spending countless hours analyzing each one manually. The technology not only saves time but also enhances the accuracy of Alex’s work, making the dream of designing smarter cities a reality. In the end, semantic segmentation turns complex visual data into actionable knowledge and helps create a better urban environment for everyone.

And Alex couldn’t be happier with the results.

Not a CVAT.ai user? Click through and sign up here

Don't want to miss updates and news? Have any questions? Join our community.
