In the first part of this series of articles, we emphasized the need for precise annotation of images and videos, which is essential for developing AI products capable of performing accurate analyses, making predictions, and delivering reliable outcomes. We focused on how time-consuming and costly solo annotation can be.

In this article, we will explore the costs and resources required to maintain an in-house team of data annotators.

But before we jump into the topic, here's a reminder of our use case:

A lead robotics scientist is creating a smart home assistant robot to differentiate between dirt and valuable items in a household setting. Life's chaos often includes scattered toys, misplaced glasses, pet fur, and god knows what else. The proposed robot would clean efficiently and help locate lost items, adding a layer of functionality beyond standard home cleaning devices. This can help elderly people keep track of their belongings, for example.

As the lead scientist, you play a crucial role in this project. Along with your small research team, you've compiled a dataset of 100,000 images showing various room settings with items scattered on the floor. According to publicly available data, this dataset size is typical for robotics projects, which can range from thousands to millions of images.

Each image features an average of 23 objects, so the task involves annotating approximately 2.3 million objects. This series presents various strategies to tackle this significant annotation task, including DIY methods, building an in-house team, outsourcing, and crowdsourcing.

Welcome to part two of our series on the costs of data annotation. This article describes the cost of hiring annotators and building the annotation team yourself.

- Case 1: You handle the task yourself or with minimal help from colleagues.

- Case 2: You hire annotators and annotate with your team.

- Case 3: You outsource the task to professionals.

- Case 4: You crowdsource the task.

Case 2: You hire annotators to annotate with your team

Now, as with anything else on this planet, there are pros and cons to having an annotation team. Let's start with the advantages and address questions about the time required for annotation and the cost-effectiveness of this approach.

Here, we will calculate only the monthly expenses and costs. The minimum team to annotate 2.3 million objects consists of 35 annotators, supported by management personnel involved in onboarding, offboarding, and upskilling annotators.

For these 35 annotators, one manager and 3-4 senior annotators are necessary to guide the team.

Contracts and Team Size

Data annotation teams vary in size from small (up to five members) to large groups, with larger teams requiring more coordination and management.

Recruiting is straightforward for small teams but complex for larger groups. Annotators may be full-time employees with fixed salaries or contractors. Contractors, however, pose challenges in retention and engagement because they work on multiple projects and expect compensation aligned with their workload.

When working with contractors, as we do, extra effort is necessary to ensure availability. For instance, if you need 35 annotators, consider hiring between 60 and 70 to account for potential unavailability.
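To see why the contractor pool has to be so much larger than the team, here is a rough sketch that treats each contractor's availability as an independent coin flip. The binomial model, the 60% availability figure, and the 95% confidence target are illustrative assumptions, not numbers from our hiring data, and `prob_at_least` and `pool_size` are hypothetical helper names.

```python
from math import comb

def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): chance that at least k of n contractors are available."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

def pool_size(needed: int, availability: float, confidence: float = 0.95) -> int:
    """Smallest pool n for which P(at least `needed` contractors available) >= confidence."""
    if not 0 < availability <= 1:
        raise ValueError("availability must be in (0, 1]")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be in (0, 1)")
    n = needed
    while prob_at_least(needed, n, availability) < confidence:
        n += 1
    return n

# Hypothetical inputs: each contractor is independently available with probability 0.6.
print(pool_size(needed=35, availability=0.6))
```

Lowering `availability` or raising `confidence` grows the pool quickly, which is the intuition behind hiring nearly twice as many contractors as you actually need.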

Time and Costs

In our experience, the hiring process takes roughly:

- Time to find a data annotation manager: 1 month or more

- Time to find one annotator: Up to 1 month

- Time to onboard one annotator: Up to 1 month

You can conduct job interviews and onboarding concurrently. If you're fortunate, you might be able to hire between 5 and 10 annotators per month. Even so, hiring and training a large data annotation team takes at least 3-4 months.
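The following back-of-the-envelope sketch turns these hiring rates into a timeline for the 35-person team. It assumes a constant hiring rate and ignores the time needed to find the manager; `hiring_months` and its `onboarding_tail` parameter are hypothetical names used only for illustration.

```python
def hiring_months(team_size: int, hires_per_month: float, onboarding_tail: float = 0.0) -> float:
    """Months to recruit `team_size` people at a constant rate.

    Interviews and onboarding run concurrently, so only the last cohort's
    onboarding (`onboarding_tail`, up to about one month) extends the timeline.
    """
    if hires_per_month <= 0:
        raise ValueError("hires_per_month must be positive")
    return team_size / hires_per_month + onboarding_tail

for rate in (10, 5):  # optimistic and conservative hiring rates
    print(f"{rate} hires/month: {hiring_months(35, rate):.1f} months "
          f"({hiring_months(35, rate, onboarding_tail=1.0):.1f} with a 1-month onboarding tail)")
```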

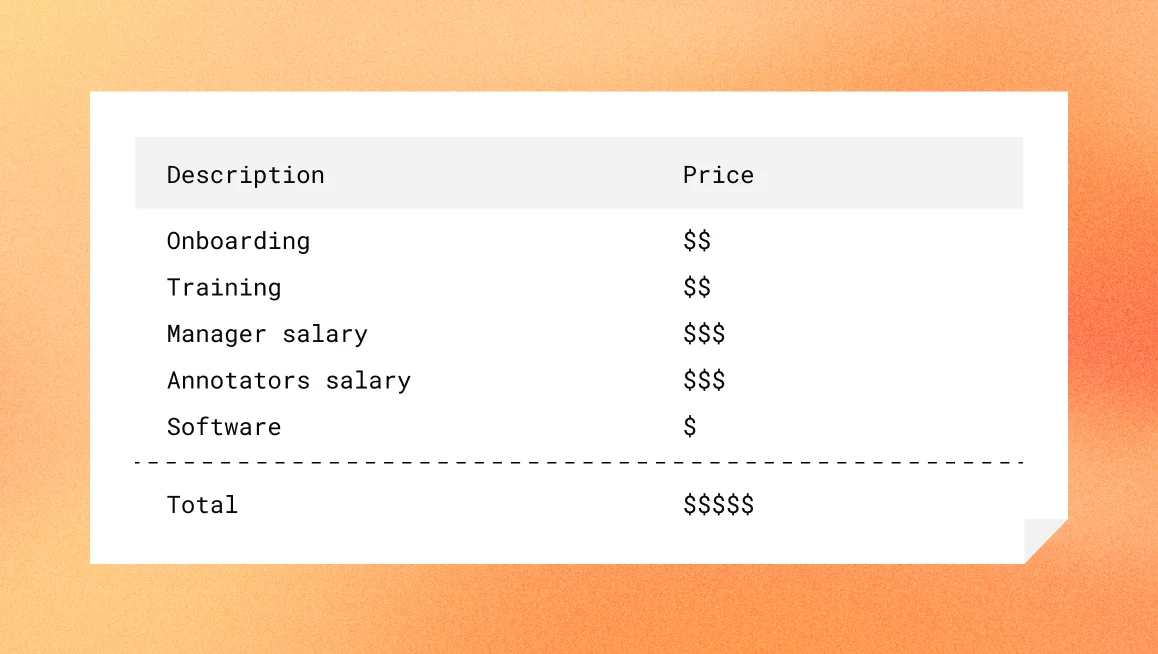

In terms of expenses:

- Manager salary (per month): Up to $6000 (data from Indeed, June 2024)

- Annotator salary (per hour): It depends on whether you can hire abroad. If so, rates start at $1 per hour; they rise to $40 per hour if you hire in the US or if a high level of qualification is required.

- Where to look for them? On Upwork, Indeed, LinkedIn, and similar platforms. Job posting fees range from $0 to $500; in rare cases, you may also use a recruitment agency.

If your service is as well known in the data annotation area as CVAT.ai, you can significantly reduce time and costs: annotators will eagerly respond to your vacancies as soon as they are posted.

Setup Time

The next step is to prepare the data: the dataset is the foundation of any robotics project.

For this project, the scientist must sift through a vast collection of video footage to select relevant frames and then craft a comprehensive data annotation specification. In our case, this specification is planned to cover 40 different classes, each to be annotated with polygons individually.

On average, the complete guideline is 30-50 pages. It will include detailed instructions for annotating each class, examples of correct and incorrect annotations, and edge cases. Drafting this detailed specification is time-consuming; it might take several weeks. The data annotation specification will be updated during the project because it isn’t possible to describe all corner cases from the beginning.

The time needed to annotate each object with polygons, which feeds into the calculations below, depends on factors such as the object's complexity and size, the image's clarity, and the annotator's skill level:

- Simple Object (e.g., a rectangular object): 5-10 seconds

- Moderately Complex Object (e.g., a car): 30-60 seconds

- Highly Complex Object (e.g., a human with detailed limb annotations): 1-3 minutes or more
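To get a single per-object figure for planning, you can weight these ranges by how often each kind of object appears. The sketch below uses the midpoint of each range and a hypothetical class mix (40% simple, 50% moderate, 10% complex); replace it with the real distribution of your 40 classes once you know it.

```python
# Midpoints of the ranges above, in seconds (an illustrative simplification).
SECONDS_PER_OBJECT = {
    "simple": (5 + 10) / 2,      # 7.5 s
    "moderate": (30 + 60) / 2,   # 45 s
    "complex": (60 + 180) / 2,   # 120 s
}

# Hypothetical share of each complexity level in the dataset (must sum to 1).
CLASS_MIX = {"simple": 0.4, "moderate": 0.5, "complex": 0.1}

def weighted_seconds(mix: dict[str, float], seconds: dict[str, float]) -> float:
    """Average annotation time per object, weighted by how often each level occurs."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("class mix must sum to 1")
    return sum(share * seconds[level] for level, share in mix.items())

print(weighted_seconds(CLASS_MIX, SECONDS_PER_OBJECT))
```

With these assumed inputs, the weighted average comes out at 37.5 seconds, in the same ballpark as the 40-second average used in the final calculations.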

Operational Costs

In addition to onboarding and training costs, the expenses for data annotation projects also include licenses and instance costs per annotator. Each annotator may require a license for the annotation software used, which can vary significantly in price depending on the complexity and capabilities of the software.

In the case of CVAT, a license costs $33 per seat per month, or you can use free open-source tools.

Remember that even free tools require time and resources to set up and support; time is money. So, while we say "free," it means that you can download and install the open-source tool, but the rest depends on your time, expertise, and effort (and how much of your paid time will be spent on this).

Operational costs also include accounting and contract management. These cannot be approximated here because they are company-specific.

Final calculations

To calculate the total time required for 35 professional annotators to annotate 2,300,000 objects, where each object takes approximately 40 seconds on average to annotate, follow these steps:

Calculate the Total Time for All Objects:

- Total time = 2,300,000 objects × 40 seconds per object = 92,000,000 seconds or 25,555.56 hours

Divide by the Number of Annotators to Find Time per Annotator:

- Time per annotator = 25,555.56 hours / 35 annotators = 730.16 hours

So, if all annotators work simultaneously and efficiently, each annotator will need about 18.25 work weeks (730.16 hours at 40 hours per week), which is approximately 4.2 months, to complete the annotation of all 2,300,000 objects.
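Here is the same arithmetic as a short Python sketch, so you can rerun it with your own object count, per-object time, or team size. The 40-hour work week and the 52/12 weeks-per-month conversion are assumptions used to turn hours into weeks and months.

```python
OBJECTS = 2_300_000
SECONDS_PER_OBJECT = 40
ANNOTATORS = 35
HOURS_PER_WEEK = 40         # assumed full-time work week
WEEKS_PER_MONTH = 52 / 12   # about 4.33 weeks per month

total_hours = OBJECTS * SECONDS_PER_OBJECT / 3600
hours_per_annotator = total_hours / ANNOTATORS
weeks = hours_per_annotator / HOURS_PER_WEEK
months = weeks / WEEKS_PER_MONTH

print(f"{total_hours:,.2f} h total, {hours_per_annotator:.2f} h each, "
      f"{weeks:.2f} weeks, {months:.1f} months")
```

It prints `25,555.56 h total, 730.16 h each, 18.25 weeks, 4.2 months`, matching the figures above.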

To calculate the costs for this scenario, let's break them down into components and sum them up over the 4.2 months required for the project. We'll assume each annotator earns $550 per month and that licenses cost anywhere from nothing to $33 per seat per month. Management and validation add $6,000 per month for the manager plus 20% of the total annotator cost.

Total Salary Costs for Annotators (4.2 months):

- Total annotator costs = $2,310 per annotator ($550 × 4.2 months) × 35 annotators = $80,850

Management and Validation Fees (4.2 months):

- Total cost for a data annotation manager = $6,000 × 4.2 months = $25,200

- Management and validation fees = $80,850 × 20% = $16,170

Conclusion: Annotating 100,000 images, that is, 2,300,000 objects, will take about 4.2 months and cost $122,220 ($80,850 + $25,200 + $16,170).
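Here is the cost breakdown as a small Python sketch. The $550 monthly annotator pay, the $6,000 manager salary, and the 20% management and validation overhead are the assumptions stated earlier; licenses are left out, as in the text.

```python
MONTHS = 4.2
ANNOTATORS = 35
ANNOTATOR_MONTHLY = 550   # assumed monthly pay per annotator
MANAGER_MONTHLY = 6_000   # data annotation manager salary
VALIDATION_RATE = 0.20    # management and validation overhead on annotator cost

annotator_cost = ANNOTATOR_MONTHLY * MONTHS * ANNOTATORS  # $80,850
manager_cost = MANAGER_MONTHLY * MONTHS                   # $25,200
validation_cost = annotator_cost * VALIDATION_RATE        # $16,170
total = annotator_cost + manager_cost + validation_cost

print(f"${total:,.0f}")
```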

To this number, you need to add the cost of software licenses.
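A sketch of how license costs shift the total, assuming one seat per annotator for the full 4.2 months. Seats for the manager and senior annotators are not included; add them if they also work in the tool. `license_cost` is a hypothetical helper.

```python
def license_cost(seats: int, price_per_seat_month: float, months: float) -> float:
    """Total license spend for a fixed number of seats over the project duration."""
    return seats * price_per_seat_month * months

BASE_COST = 122_220  # annotation cost without licenses, from the conclusion above

for price in (0, 33):  # free open-source tool vs. $33 per seat per month
    licenses = license_cost(seats=35, price_per_seat_month=price, months=4.2)
    print(f"${price}/seat/month: licenses ${licenses:,.0f}, total ${BASE_COST + licenses:,.0f}")
```

Keep in mind that the $0 row still hides the setup and maintenance time of a self-hosted tool.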

Hidden and One-Time Costs

When calculating how much an annotation team costs, it is worth taking into account one-time costs, such as the time and effort spent on hiring.

As we’ve mentioned before, assembling a data annotation team starts with recruiting, a crucial step that sets the tone for the team's development and effectiveness. Organizations typically choose between outsourcing recruitment or handling it internally.

Time and Cost Estimates:

- Outsourcing Recruitment

  - Time: Recruitment agencies can speed up the process, typically taking 2 to 6 weeks to fill a position.

  - Cost: Agencies charge a fee based on the position's annual salary, usually 15% to 30%.

- Internal Recruitment

  - Time: This method can take 4 to 8 weeks, depending on the efficiency of HR processes and candidate availability.

  - Cost: Costs include job posting fees ($0 to $500) and internal HR labor (approximately $55,000 annually or $26 per hour).
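For a rough sense of scale, here is a sketch comparing the two routes for the manager position, using the upper-end $6,000 monthly salary from above. The 40 HR hours per search is a hypothetical figure, and the function names are illustrative.

```python
def agency_fee(annual_salary: float, rate: float) -> float:
    """Agency fee as a share of the position's annual salary (typically 0.15-0.30)."""
    return annual_salary * rate

def internal_recruiting_cost(posting_fee: float, hr_hours: float, hr_hourly_rate: float = 26.0) -> float:
    """Job posting fee plus HR staff time at roughly $26 per hour."""
    return posting_fee + hr_hours * hr_hourly_rate

manager_annual = 6_000 * 12  # $72,000 per year at the upper-end manager salary

low, high = agency_fee(manager_annual, 0.15), agency_fee(manager_annual, 0.30)
print(f"Agency: ${low:,.0f} to ${high:,.0f}")

# Hypothetical: 40 HR hours per search, with a free or a $500 job posting.
print(f"Internal: ${internal_recruiting_cost(0, 40):,.0f} to ${internal_recruiting_cost(500, 40):,.0f}")
```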

The numbers provided are approximate and based on data from Indeed and LinkedIn; actual costs may vary and should be aligned with the company's internal processes. For example, at CVAT.ai, we have automated our hiring process, enabling us to recruit the best annotators on the market at competitive prices. We use Remote.com for onboarding candidates and are quite satisfied with this HR platform. Our annotators come from various countries, including Kenya, India, Nigeria, Ghana, Nepal, and Indonesia.

Considerations for Hiring Relatives

Small teams might consider hiring relatives for data annotation tasks. While this can add value in terms of trust and loyalty, it often leads to challenges related to professionalism and cost: performance might not meet professional standards if the hiring criteria are not aligned with the job's technical demands.

Management Overhead

Post-recruitment, managing a data annotation team involves handling administrative tasks essential for maintaining AI development standards:

- Paperwork and Compliance: Managing contracts and compliance with labor laws.

- Financial Management: Overseeing accounts and payment systems.

- Work Environment Management: Providing training, managing workloads, and fostering a supportive work atmosphere.

Additional Considerations

- Technology and Tools: Investments in data management and annotation tools can enhance efficiency.

- Team Dynamics: The interaction between team members and management style significantly impacts productivity.

- Market Conditions: Economic factors and labor availability can influence recruitment and operational costs.

These elements are often seen as "hidden costs." They vary significantly by organization, but because they can substantially affect overall expenses, they should be included in the final budget.

Conclusion

What will be the total duration and costs of the entire project? Here, we are discussing the baseline minimal price, excluding hiring and hidden costs:

- Total Duration: 18.25 work weeks, which is approximately 4.2 months.

- Cost Range: Costs start at around $122,000 and can rise substantially depending on team capacity and other factors, such as where you are located and whether you hire locally or worldwide.

What else should you take into account when reading this article?

- The calculation for the hiring process assumes linear and consistent recruitment and onboarding, which might not reflect real-world variations. Realistic scenarios may need buffer times for unexpected delays and additional costs for unplanned issues.

- The provided time and costs assume maximum efficiency. They may not account for variables such as sick leave, training efficacy, and turnover rates, which could significantly impact both time and cost estimates.

- Empirical data from similar past projects could further refine the estimates of per-object annotation time and onboarding costs.
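One crude way to build in the buffer mentioned above is to divide the ideal timeline by the share of paid time that actually goes into annotation. The availability factors below are hypothetical, and the sketch stretches only the duration; costs would grow too if you keep paying annotators for the extra time.

```python
def adjusted_months(base_months: float, availability: float) -> float:
    """Stretch the ideal timeline when only a fraction of paid time is productive.

    `availability` in (0, 1] is a hypothetical factor covering sick leave,
    turnover, and retraining; 1.0 reproduces the maximum-efficiency estimate.
    """
    if not 0 < availability <= 1:
        raise ValueError("availability must be in (0, 1]")
    return base_months / availability

for availability in (1.0, 0.9, 0.8):
    print(f"{availability:.0%} productive: {adjusted_months(4.2, availability):.1f} months")
```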

Overall, the presented figures are reasonable but should be treated as approximations that may vary with real-world execution.

And that’s all for today. See you in the next article, where we will discuss how much it costs to outsource data annotation to professionals.

